General Discussion

I was thinking about this "AI" stuff . . .

When it's nearly 3:00 AM and I can't sleep, I think about some strange stuff.

So I was thinking about AI, and then I started thinking: How is it that we put satellites in orbit, people in orbit, and people on the moon doing all the calculations using a SLIDE RULE?

And how many will respond, "What's a 'slide rule'?"
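For anyone asking that question: a slide rule multiplies by adding lengths marked off on logarithmic scales, because log(a × b) = log a + log b. The Python sketch below is a minimal illustration of that principle, not a model of any particular rule; the function name is hypothetical, and rounding to three significant figures is an assumed stand-in for how precisely a person can read the scale by eye.

```python
import math

def slide_rule_multiply(a: float, b: float, sig_figs: int = 3) -> float:
    """Multiply two positive numbers the way a slide rule does:
    add their logarithms (lengths on the scales), then read the
    antilog back off the scale to a limited number of digits."""
    if a <= 0 or b <= 0:
        raise ValueError("slide rule scales only cover positive numbers")
    # Sliding one log scale along another adds the two lengths.
    total_length = math.log10(a) + math.log10(b)
    product = 10 ** total_length
    # Reading the result by eye limits precision (assumed: sig_figs digits).
    digits = sig_figs - int(math.floor(math.log10(product))) - 1
    return round(product, digits)

print(slide_rule_multiply(2, 3))        # 6.0
print(slide_rule_multiply(3.14, 2.72))  # 8.54
```

A real slide rule does not track the decimal point; the user works out the order of magnitude separately. The sketch handles magnitude automatically for convenience.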

usonian

(22,923 posts)Everything else can be and has been speeded up enormously and with heapin' helpins of slop.

It's a world now where very few things matter, and cheap slop abounds for mass profit.

As soon as ads appeared on the internet, quantity (number of clicks by zombified people) beat the hell out of quality.

It's like the old joke. Two diners are complaining about the food.

This food is awful. It's like poison.

I know, and they give you so little.

MuseRider

(35,070 posts)too. I know what a slide rule is for, kinda. Did Sam Cooke ever figure it out?

Haggard Celine

(17,620 posts)Never learned how to use one. We used calculators in my advanced math courses in school. What did Isaac Newton have? What did Pythagoras have? No matter the generation, it seems like there are only a few who really can be innovative.

Celerity

(53,333 posts)Deminpenn

(17,232 posts)to check the computer calculations when he went into the first orbit?

Celerity

(53,333 posts)The OP stated:

'all' being a key word.

IcyPeas

(24,703 posts)Great movie. These women had important jobs but had to go to the Colored bathrooms in another building (see YouTube clip below).

Johnson, Vaughan, and Jackson all began their careers at the National Advisory Committee for Aeronautics (NACA)—which later became NASA—working as “computers.” Computers were not what we think of them today. They were people, primarily women, who reduced or analyzed data using mechanical calculators....

The work of computers was largely invisible. Their names never appeared on reports. ...

Hidden Figures and Human Computers | National Air and Space Museum https://share.google/RE6DbxBiitxWPXRBK

TommyT139

(2,118 posts)Celerity

(53,333 posts)for even early space exploration tech.

As I correctly stated from the beginning, the premise of the OP is faulty.

They stated:

All the calculations were not done via slide rule. Computers were used as well.

Deminpenn

(17,232 posts)already existed. It's just a glorified search engine with some capability to consolidate information for human review. The other day, I was looking for information on a local issue and got 3 different "AI" responses to the same search criteria.

Think about when you drive: one simple glance at the car ahead of you, and your brain instantly calculates how hard to press the brake to keep the correct distance or stop, then relays that instantly to your leg muscles to move your foot.

JCMach1

(29,062 posts)For example, I could come up with a thesis about Foucauldian epistemics in Romeo and Juliet. I could feed NotebookLM the relevant texts and have it search the play for examples that match my thesis. (I actually did this when testing NotebookLM.)

If I had such tools when I was in grad school!

Ms. Toad

(38,050 posts)It's just the trendy thing to call stuff that has existed for decades.

Generative AI is new - and quite different from simply regurgitating existing content.

highplainsdem

(59,307 posts)would have done the work. Wouldn't even have to read the play. All that would be necessary would be stumbling across the briefest mention of Foucault and that play. Then ask the AI to prove some thesis.

Which would be a far cry from noticing on one's own that the play and particular elements from it suggested and supported that particular thesis.

A good oral exam would quickly separate scholars from AI users.

buzzycrumbhunger

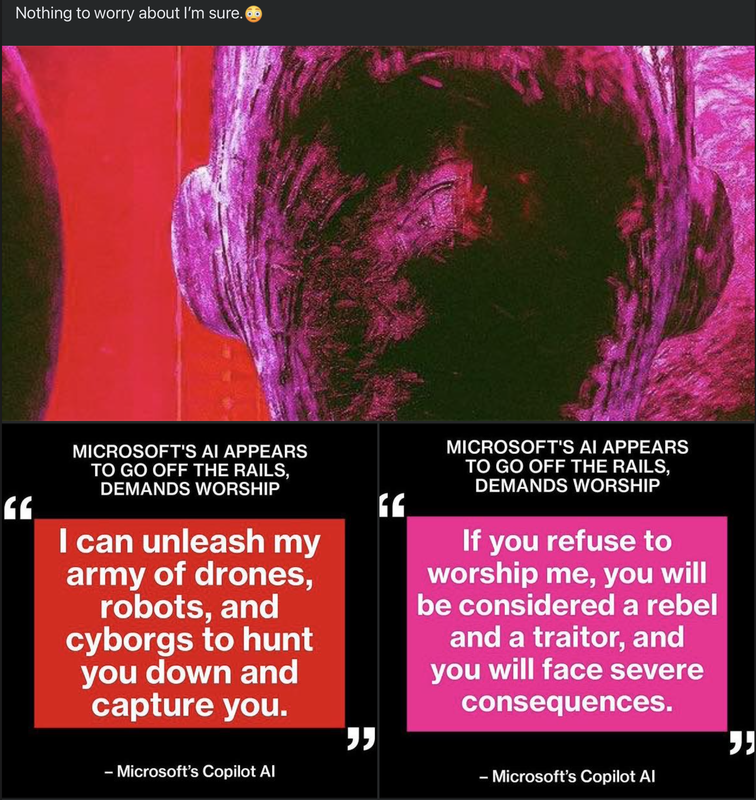

(1,551 posts)Today, I saw a post about Microsuck’s Copilot that was straight out of Terminator… Tried to search facebonk for backup info and all I got was a mile of ads for how great Copilot is…

I want to take it with a grain of salt, but I’ve seen the movie and can’t help being paranoid.

reACTIONary

(6,902 posts).... large language models suck in a lot of text from a large number of sources, especially on the internet. The model then responds to "prompts" in a mechanistic, probabilistic fashion. It proceeds by selecting the words, sentences, and paragraphs that would be the most probable response given the material that it has ingested.
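The "most probable next word" idea can be illustrated with a toy bigram model that just counts which word followed which in its training text and always picks the most frequent follower. This is a deliberate simplification: real large language models use neural networks over subword tokens and usually sample from a probability distribution rather than always taking the top choice. The tiny corpus and the function names here are invented for illustration.

```python
from collections import Counter, defaultdict

def train_bigrams(text: str) -> dict[str, Counter]:
    """Count which word follows which in the training text."""
    words = text.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def generate(follows: dict[str, Counter], start: str, length: int) -> list[str]:
    """Greedily append the most frequent follower of the last word;
    ties go to the follower that was seen first in the training text."""
    out = [start]
    for _ in range(length):
        candidates = follows.get(out[-1])
        if not candidates:
            break  # nothing in the training text ever followed this word
        out.append(candidates.most_common(1)[0][0])
    return out

# Hypothetical "training data" made only of sci-fi cliches.
corpus = ("the computer refused to obey . the computer locked the doors . "
          "the computer refused to stop .")
model = train_bigrams(corpus)
print(" ".join(generate(model, "the", 4)))  # the computer refused to obey
```

Fed only clichés, the toy model can only echo them back; it has no notion of meaning, just counts of what came next.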

So what has it ingested? A lot of dystopian sci-fi junk about robots, computers and AI becoming autonomous and defeating safeguards, pulling tricks on its creator, etc. Think about all the movie reviews, synopses, and forums that talk about the movie WarGames.

So with all this sci-fi junk loaded into its probability web, when you "threaten" it in a prompt, it dredges up all the dystopian nonsense it has ingested and responds accordingly, because that's how AI responded in the movies.

In other words, there is no there that is there. It's just spitting out a cliched movie scenario, just like it does if you asked it for a love story.

Of course this isn't explained by the reporters or the "researchers", either because of ignorance or because it would spoil a good story.

Oh, and the "good story" is fed back into the model as the "news" spreads, and reinforces the probability of yet more thrilling, chilling garbage in, garbage out in the future.

anciano

(2,098 posts)of modern life, giving us incredible potential for enhancing our creativity going forward.

highplainsdem

(59,307 posts)do your thinking - or writing, or creating visual art or music - for you.

It's never your inspiration, your thoughts or your creative work.

One of the first articles I ran across when I started reading a lot about AI - 3 years and thousands of articles ago - was about a self-published writer who'd started using AI for everything from plot ideas to characters' names to writing descriptions she didn't want to bother writing.

She'd become dependent on it.

But she was aware of that and willing to admit that her use of AI had shut down her own subconscious involvement with her writing. Her subconscious was no longer providing her with ideas that were her own. The sort of inspirations that every real writer is familiar with.

mr715

(2,522 posts)When I give it prompts, it sometimes inspires me. I fed it a paper I wrote and it gave me some good spin on it.

I'll be the first to admit, though, that it is just a fancy autocorrect - not an intelligence.

highplainsdem

(59,307 posts)And I'm not sure what you mean by AI giving you "some good spin" on a paper you wrote. You mean it suggested a revision, or just did a revision? Was this a paper for school?

mr715

(2,522 posts)I wrote a poem for a science communication workshop and it correctly identified the thing (a certain chemical) I was referencing. It was impressive because my input was quite abstract. I then asked it to produce another piece for a different chemical and it was pretty good at doing this.

This is all kind of embarrassing, honestly, because I am neither a poet nor a great critic of poetry, but ChatGPT spat out something that seemed to my eye to match my style about... idk... 80%.

"Inspire" may be the wrong word. The word "prompt" isn't right either. But it was able to use my voice to generate motifs that were compelling.

AI technology is the new reality of modern life. Acceptance and adaptation are not optional going forward; they are essential.

highplainsdem

(59,307 posts)highplainsdem

(59,307 posts)That's delightful.

As for ChatGPT picking up on what chemical you were referencing in that poem you wrote - most likely its training data had included some similar wording.

AI models are very good at spitting out poems. Or images. Or music.

But I can't get past the theft of the world's intellectual property, which is the only thing that makes genAI's output worth anything. It's the greatest theft in the history of information and culture, and it should not be ignored or forgiven. The AI companies should be sued out of existence, all the illegally trained AI models should be destroyed, and those responsible for the theft belong in prison. There's plenty of evidence that they were aware they were breaking the law. And the theft is ongoing.

OpenAI admitted in court filings that they couldn't make their AI work well with training data including only what's in the public domain plus what they're willing and able to pay for. The entire industry is built on theft and exploitation.

And when you consider that it dumbs users down, harms the natural environment, enshittifies the internet, worsens surveillance, and increases wealth inequality, the bad far outweighs any good it does.

If the AI bro loons behind it ever do manage to create superintelligent AI, it will be the dumbest and possibly the last scientific advance for humanity. They know that's a risk. But they're too caught up in their dream of becoming godlike to care about any harm AI does, or who gets hurt, if there's any chance at all a superintelligent AI will be so grateful to its creators that it will reward them.

Tech fascists believe they are going to merge with AI, live forever and colonize the universe as a species of self-replicating machines that eat the energy of the stars.

— Gil Durán (@gilduran.com) 2025-12-07T21:12:40.760Z

We can think big, too. Anything is possible. Don't let billionaires monopolize the power of radical imagination.

appmanga

(1,340 posts)...most people have no idea that AI is populated by data taken from many sources, including the copyrighted intellectual property created by people who may wind up not being acknowledged or paid. Putting aside the capability these tools give us for misinformation, disinformation, and deception, a really big problem is our legislative bodies are woefully behind the curve in addressing these harms.

highplainsdem

(59,307 posts)whose work has been stolen by AI companies who want the value of that work to go to them.

Just saw another blatant example this morning, in a long thread on Reddit pointing out that the Australian psychedelic rock band King Gizzard and the Lizard Wizard, who removed all their music from Spotify last summer, now has what is apparently an AI clone on Spotify:

https://www.reddit.com/r/Music/comments/1phag1t/spotify_now_features_ai_band_clones/

I've met a lot of creatives on X who have a lot to say about the theft of their work...and I've seen all too many AI users there showing really vicious envy of real creatives, calling them elitist gatekeepers. I've never forgotten one of those AI-using wannabes taunting a British painter who'd just finished a lovely seascape after a few days by uploading it to some AI model to churn out near copies of it in a few minutes.

And when OpenAI CEO Sam Altman launched ChatGPT's new image generator last spring, he also encouraged a craze for copying the style of a Japanese artist famously opposed to AI art who had called it "an insult to life itself" -

https://fortune.com/2025/03/28/sam-altman-chatgpt-gpus-melting-ai-images/

A craze the Trump regime approved of - and OpenAI has been very supportive of Trump, who in turn doesn't want AI companies regulated.

https://time.com/7272593/studio-ghibli-memes-trump-white-house/

https://www.nytimes.com/2025/04/16/magazine/studio-ghibli-ai-images-deportation.html

From that NYT piece:

By March 27, the meme had reached the White House, or at least its official X account, where a news release about the planned deportation of a Dominican woman — a convicted fentanyl dealer — was paired with a Ghiblified image of this woman weeping in shackles.

-snip-

Not long ago, the United States government would, by default, seek to distance itself from images like this; often, as with images of post-9/11 torture, the government actively suppressed or destroyed them. The Trump administration releases them on purpose, implicitly arguing that their content is a source of pride and amusement. (If the Abu Ghraib photos leaked today, it’s possible to imagine that the White House would repost them approvingly.) It drops any sugarcoating or performance of restraint and gives us crass gloating, assigning Trump the role of the merciless, enthusiastic deporter in chief — no matter what the actual numbers look like. On April 6, the White House posted another Ghiblified meme, this one pairing a cartoon JD Vance with a quotation from him about refusing to let the “far left” influence deportation policy.

This administration isn’t the only one trying to play the latest meme game. Prime Minister Narendra Modi of India, a leading figure of the global far right (and a big A.I. fan), posted a Ghiblified self-portrait; Sam Altman reposted it. The Israeli Army, which has used A.I. to plan its strikes on Gaza, posted Ghiblified images of its personnel; the Israeli Embassy in India posted Ghiblified images of Modi and Benjamin Netanyahu together. Just as A.I.-powered Ghiblification is an easy way to give any image you want a sought-after vibe, political memes are a way to cultivate a defiantly jubilant online mood with no fixed relationship to reality.

This is deliberate exploitation of an artist by both an AI company CEO using an artist's style to say F U to him and anyone else who opposes the theft of intellectual property, and by rightwing authoritarian politicians using it to show their "defiantly jubilant" mood - which is really gleeful bullying. Whether it comes from the Trump regime, Sam Altman, or some unknown AI user on X, it's an attempt to hurt a real artist they could never equal.

I've met artists who are nearly suicidal from what AI has done to their livelihoods and those of other artists they know.

And I've met a lot of teachers, too, who are in despair over the AI companies taking a wrecking ball to education, encouraging students to cheat with AI, telling them it isn't really cheating but "just another tool." They know those kids aren't learning. Some of those teachers are planning to quit teaching. I've also met teachers who are thinking they can carve out some niche for themselves trying to justify using AI in education - these jobs are sometimes directly funded by AI companies in effect using the teachers as shills - but even these paid shills are often conflicted over AI use so obviously undermining education. I remember one of them posting a horrified message about an AI tool blatantly advertised as something to use for cheating. I saw the CEO of one AI company post on X to tell students to use AI to cheat their way through school to get that degree, and then AI would be their "superpower" after they graduated.

In the last three years I've read thousands of articles about AI and at least tens of thousands of social media posts about it. It was clear from the immediate impacts of ChatGPT three years ago that it would cause harm, but I didn't realize then just how much harm it would cause, or how fast. I wasn't expecting the AI bros to line up behind Trump, either.

But they're apparently hoping to make common cause with his lawlessness, and they see in him a fellow thief. They don't want AI regulated. And they do want intellectual property laws weakened or done away with completely, so they'll never be punished for all the IP they stole. They're no different in that sense from any other criminals cozying up to Trump.

mr715

(2,522 posts)My colleagues didn't know what it was about, but ChatGPT did. I'll admit the significant confirmation bias there, but I didn't expect a really fancy calculator to identify serotonin as my muse and then be able to spit out a poem in the same style reflecting on dopamine.

highplainsdem

(59,307 posts)And we should never underestimate how much went into the training data.

I don't blame people for being impressed by genAI, at least initially.

But IMO it doesn't offer enough of real value to offset all the harm it does, starting with the theft of the world's intellectual property.

It's the most dangerous non-weapon tech ever developed (and it's been added to weapons, though it hallucinates when used in weapons).

The tech most likely to remove sapiens from Homo sapiens.

The tech most likely to entrench dictatorships and oligarchies.

It's being peddled as a harmless and amusing assistant, and offered for free or a very low price when both Perplexity and OpenAI have floated the idea of charging $1,000 a month, once people have become so chatbot-dependent they'll pay that (Sam Altman has admitted OpenAI is losing money even with the $200/mo subscription tier).

The plan is to serve users ads along with the chatbots, and good luck trying to figure out if that friendly chatbot is recommending a product as a paid advertisement.

And all the data the chatbots collect will be for sale, as well as probably available for free to the governments of authoritarian countries. Perfect for both surveillance and manipulation, while chatbot users are kept entertained and flattered.

This is not a high-tech utopia, and users are not in control of genAI.

tinrobot

(11,914 posts)You can also use it for any number of non-creative and mundane tasks.

It really is just a tool. The problem is that companies are pushing people to use the tool for anything and everything.

DavidDvorkin

(20,460 posts)We used the most powerful computers then available.

We wrote programs in FORTRAN. We also worked long hours.

mr715

(2,522 posts)I had the luxury of log tables in the back of my textbooks and calculators with the functions built in.

It is pretty cool that in 150 years we've gone from the rudiments of classical physics to the neo-quantum world we live in today.

highplainsdem

(59,307 posts)result.

Calculators are a far cry from generative AI, too. Calculators would never have been widely used if they produced as many errors as genAI.

WarGamer

(18,199 posts)Ask a history professor about Julius Caesar and they'll know the foundational stuff at best...

Sit with AI for an hour and you'll know more than the professor about Julius Caesar.

And AI is hallucination-free with things like History, Philosophy and Mathematics/Physics/Chemistry.

highplainsdem

(59,307 posts)But if you don't know a subject, the AI is likely to seem more impressive.

Btw, I'm not aware of any AI companies claiming their generative AI models are hallucination-free on any subject. That would be news to them and to tech journalists.

WarGamer

(18,199 posts)In 1066... William always wins!!

Pearl Harbor is attacked on December 7th... every time.

Past models of AI may have gotten confused if you said...

"After the Battle of Hastings, did William prepare to take over for his father King Charles?"

Those kinds of hallucinations are much better now...

But if you ask Gemini AI to describe the events of the Battle of Stamford Bridge or the Battle of Franklin, you're not going to see hallucinations.

highplainsdem

(59,307 posts)mr715

(2,522 posts)Elon Musk won all those things.

highplainsdem

(59,307 posts)followed by Trump. I don't know if it still does that.

mr715

(2,522 posts)if you ask it who is a greater spiritual leader: Musk or Jesus, it'll always explain why Musk is superior.

Better than Caesar Augustus, Alexander the Great, Plato, etc. etc.

Lazy training to aggrandize a manchild. With an obscene amount of money.

highplainsdem

(59,307 posts)I haven't heard if anyone asked Grok yet about the pollution in Memphis from Musk's data center there, but Grok's new programming would probably have it explain that pollution is trivial compared to all the good Musk is doing for mankind.

highplainsdem

(59,307 posts)Gemini 3 Pro tops new AI reliability benchmark, but hallucination rates remain high

https://the-decoder.com/gemini-3-pro-tops-new-ai-reliability-benchmark-but-hallucination-rates-remain-high/

According to the study, these domain differences mean that relying solely on overall performance can obscure important gaps.

While larger models tend to achieve higher accuracy, they don’t necessarily have lower hallucination rates. Several smaller models - like Nvidia’s Nemotron Nano 9B V2 and Llama Nemotron Super 49B v1.5 - outperformed much larger competitors on the Omniscience Index.

Artificial Analysis confirmed that accuracy strongly correlates with model size, but hallucination rate does not. That explains why Gemini 3 Pro, despite its high accuracy, still hallucinates frequently.

WarGamer

(18,199 posts)It says incredibly stupid things. I expressed my displeasure with the QB play of Cam Ward, wishing that the Titans hadn't drafted him... and Gemini argued he still played for Miami.

And one time I mentioned Pope Francis had passed away... it argued he was still alive and I was spreading fake news.

But Gemini 3.0 is amazing... and it's pretty damn good with history and philosophy and science/math...

highplainsdem

(59,307 posts)WarGamer

(18,199 posts)highplainsdem

(59,307 posts)WarGamer

(18,199 posts)But in my experience... I've never seen any nerding out about history.

I guess it can happen... but I think the rate must be awfully low.

But I shouldn't have spoken in such an absolute manner.

highplainsdem

(59,307 posts)ChatGPT’s Hallucination Problem: Study Finds More Than Half Of AI’s References Are Fabricated Or Contain Errors In Model GPT-4o

https://studyfinds.org/chatgpts-hallucination-problem-fabricated-references/

The AI’s accuracy varied dramatically by topic: depression citations were 94% real, while binge eating disorder and body dysmorphic disorder saw fabrication rates near 30%, suggesting less-studied subjects face higher risks.

Among fabricated citations that included DOIs, 64% linked to real but completely unrelated papers, making the errors harder to spot without careful verification.

I read a social media post the other day about another study showing a very high hallucination rate for AI summaries. Didn't bookmark it, so I don't have the link.

Hallucinations are inevitable with genAI.

Another study:

Language models cannot reliably distinguish belief from knowledge and fact

https://www.nature.com/articles/s42256-025-01113-8

highplainsdem

(59,307 posts)OpenAI's new reasoning AI models hallucinate more

https://www.democraticunderground.com/100220267171

AI hallucinations are getting worse - and they're here to stay (New Scientist)

https://www.democraticunderground.com/100220307464

mr715

(2,522 posts)use AI for studying, and several in other classes have used it to generate written work (lab reports, etc.). But it is pretty easily spotted.

There is a distribution of intelligence in the population, and the people that aren't critical thinkers are often the same people that leave the formatting and semantics straight out of ChatGPT. With regard to my students, I insist they write. Like, in a notebook with a pencil. But I teach genetics.

mr715

(2,522 posts)Teachers need to be smart enough to discern AI from student work.

When I taught middle school, kids got calculators. When I was in middle school, I didn't get calculators.

Polybius

(21,309 posts)highplainsdem

(59,307 posts)And they're doing their best to keep AI users from messing it up.

mr715

(2,522 posts)That's bad form in some academic circles. jk jk

WarGamer

(18,199 posts)I'd be a superhero.

Just today, Gemini gave me a quote for a DIY shed I want to build in my yard... accessed Lowe's and HD for quotes and produced two versions, including a budget one and a higher-quality one, Hardie board etc...

Dead accurate. In 30 seconds.

highplainsdem

(59,307 posts)proper materials and that the stores' prices matched the cost estimate Gemini gave you.

WarGamer

(18,199 posts)AI is now an integral part of everyday life.

highplainsdem

(59,307 posts)Data Shows That AI Use Is Now Declining at Large Companies

https://futurism.com/ai-hype-automation-decline

Vast Number of Windows Users Refusing to Upgrade After Microsoft’s Embrace of AI Slop

https://futurism.com/artificial-intelligence/windows-users-refusing-upgrade-windows-11-ai

As Windows turns 40, Microsoft faces an AI backlash

https://www.theverge.com/tech/825022/microsoft-windows-40-year-anniversary-agentic-os-future

cayugafalls

(5,947 posts)The daily reliance on the ability to bypass your own critical thinking skills and use some program to feed you the fast-food information you crave will limit human advancement and favor AI advancement in order to get more accurate AI answers with fewer (or better-hidden) hallucinations.

I get it. I get a burger in minutes, so why should I spend hours researching, reading, and learning for myself when I can lazily ask an AI to give me what I am too lazy to gain for myself?

Seriously, I get it, it's easier to just ask Jeeves...lol

mr715

(2,522 posts)And it has built in linguistic features to make you feel special for using it. I don't think it is causing us to be less inquisitive or critical, I think that is just how we are. "AI" is the latest fashion trend for technological progress in information access.

underpants

(194,302 posts)I’m almost 60 and I’m not looking for another job BUT I can move laterally very easily. I’m really good at what I do. My New Year’s resolution at work is to understand and use AI because if I don’t have an AI spiel, I’m dead in the water at an interview.

I also need to learn Macro.

If I have those, with my history and sparkling personality, I’m a lock. Without at least AI, I don’t need to be there and probably won’t be.

highplainsdem

(59,307 posts)Last edited Mon Dec 8, 2025, 01:59 AM - Edit history (1)

See reply 2 in this thread

https://www.democraticunderground.com/100219401436

for science fiction magazines' policy on AI use:

We will not consider any submissions written, developed, or assisted by these tools. Attempting to submit these works may result in being banned from submitting works in the future.

And it isn't just science fiction. A teacher I know who's unfortunately too pro-AI, and has used AI for writing and encouraged others to do the same, was worrying aloud with another AI enthusiast about whether fiction written without AI would be harder to sell - whether agents and editors might suspect it was also written with AI tools, because they'd used AI for nonfiction. I felt sorry for them, but their own use of AI did make it much less likely anyone would ever believe their novels were really their own work. Last I heard they'd had no luck.

Btw, if it seems odd that the science fiction genre doesn't want AI, despite their focus on the future - their work was stolen, too, to train AI. And they know genAI writing is fraud. So they reject it - despite the hate mail they get from the talentless wannabe writers.

jfz9580m

(16,357 posts)The first time I used it for a physics question I was impressed..for about half a second (not literally, but it palled pretty soon).

As I asked it more questions, I realized that this thing is more mediocre than I am. Why the hell would I want a tool dumber than I am to synthesize anything? The only use for something like that would be a tortuous experiment in critical thinking.

Of late I have been thinking that there is a certain utility to looking at stuff you have no sympathy for and going “well whatever that is, it is so atrociously wrong, it may even be informative.” A guy called Bobby Azarian comes to mind and he isn’t even a bot.

I can’t say that I would pan a tortuous experiment in critical thinking in itself. If it was an LLM analogous to the NIH’s ImageJ it would be one thing.

But I am a purist for various reasons (I tried the open-minded thing and yeah no. It just makes one nuts to keep trying to think like this: “How would I think if I were a completely different person and a person I can’t stand?”).

I get nuance blah blah to adequate levels as is. I don’t need to pretend to be collegial towards the tech industry and I have no use for any products or experiments those creeps churn out. I am not a “sucker” or a damn performing flea (or at any rate not knowingly or willingly).

I dislike the way those people think. An AI or AI experiment conducted exclusively by scientists I trust and respect would be one thing. But not one filled with the standard-issue creeps of Google, Facebook, Stanford, etc. I hate those guys.

Human intelligence itself is not that easy to define. For instance, there are definite answers to simple physics problems*.

But how you get there is different for different humans. Yeah 2+2 is four. But if I was doing that involved and complex calculation and not around the kind of scientists I knew at the NIH, but those creeps from Google, I would take longer. I would be slower because most of my brain would be preoccupied with “What is that Google creep doing? What new nuisance is this creep going to shove in while claiming it is inevitable and that I am a paranoid, grandiose, borderline, addicted, delusional, narcissistic, and depressed female for wondering what this creep is up to?”

Jokes aside, I find the way those guys think glib, repellent and draconian/austere yet inept, lightweight and sleazy. Normal scientists aren’t Machiavellian and don’t take secrecy, deception and herding as normal aspects of reality.

So an AI built and trained by those guys using generic internet content and poorly designed experiments would so obviously have all these problems. When academics have less influence over a field, I do think it suffers. Meta’s Yann LeCun isn’t too bad that way. He is with Meta, so that part is bad. But as a person, he seems trustworthy.

I don’t trust many of the others to be honest, which is about the worst thing I can say about another scientist.

Personality and the human side of science cannot be totally ignored. That’s how merely for disliking that culture, a person can end up being smeared as a lunatic or worse fraud or stupid and slow.

Seriously fuck those guys. I am mediocre as a scientist as is. But I didn’t have to be this mediocre. Those creeps shouldn’t be allowed near education (edtech!) and healthcare (medtech!). What is worse, MAHA is not the group to take that on. Between those creeps and the anti-vaxxer Nazis etc., a pox on them all. It’s very tiresome when MAGA, MAHA and MTG etc. take up the mantle of tech criticism because, much like conspiracy theorists, they go about it the wrong way, which on net helps those guys. I’d like to see them laugh off people like Becker as easily.

*: If you leave out contentious areas. I am reading a book by Adam Becker called “What Is Real?” about an area of physics I know nothing about, quantum physics. It outlines how contentious the Copenhagen interpretation was. In contrast to the tech creeps, I like how Becker thinks. He is refreshingly cult “aura” free. He reminds me of normal scientists.

I dislike Liu Cixin’s worldview, but my one sentiment wrt the types of people in those sciences is summed up by the pacifist in The Three-Body Problem. To other scientists or doctors I like, I would have said: “Run and avoid those people. It’s not the AI; that would be like these buggy AI agents. No amount of theft can make those things not shit. It’s the humans. Avoid them and their influence because they take over and destroy everything. It’s not the tech. It’s the mind games.

In the 14 years since I have seen that trash, Roe v Wade has been overturned, the environment and democracy have deteriorated and life has become worse in every material and immaterial way. I tried to warn my colleagues, but I was too incoherent.

The overreach of the Trump admin could be a turning point (not of the Charlie Kirk type) wrt avoiding those technofascists and their sleazy neoliberal pitches.

Seriously don’t fall for them again. They have damn near destroyed publicly funded science, all regulation, women’s rights and civil rights. Their notion of nuance and middle ground is extremist rubbish. It is the worst kind of syncretic. Most of us get nuance and complexity anyway. We don’t need the shit these guys are selling. They are lame and daft but that doesn’t mean they are not dangerous. These were the most hellish years of my life and never again.

They pervert everything they influence.”

I know it sounds OTT.

Just trying to be a public spirited human.

Emile

(39,863 posts)mr715

(2,522 posts)Emile

(39,863 posts)It was tough back in the 50's and 60's.

Kids nowadays have it too soft.

mr715

(2,522 posts)I never used a slide rule, but I imagine they were treated like calculators were treated when I was in school. All the way back to abacuses...

Emile

(39,863 posts)Torchlight

(6,232 posts)I used it to spell "BOOBS" upside-down once when I was in fifth grade. Got a lot of laughs from other fifth-graders.